The pivotal role of voice

In a recent conversation, a fellow founder mentioned the persistent challenge of knowledge transfer within startup teams. It’s a familiar struggle: extracting the wealth of knowledge residing in founders’ minds and sharing it effectively with the rest of the team. It is impractical to expect founders to write down every detail on paper or a computer. Recording thoughts on a dictaphone or a phone’s voice recorder, however, is a far less daunting task.

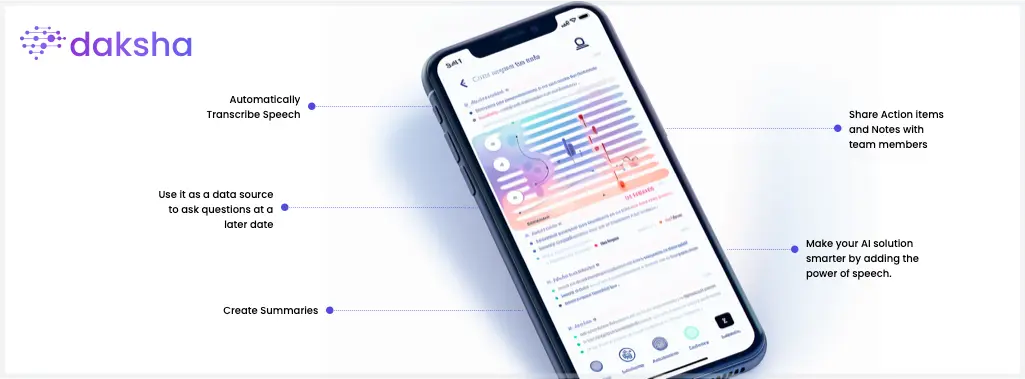

This is just one example of the pivotal role of voice as a primary communication medium for humans. For some organizations, audio is one of the most important data formats: meeting recordings, speeches, public domain audio clips, and more. A data-driven AI solution falls short unless it handles speech well.

Speech, as the fundamental mode of human communication, adds a layer of richness that text, graphics, and other data sources can’t fully capture. The variations in tone, pitch, and intonation convey a spectrum of emotions, intentions, and nuances that written or non-verbal communication often struggles to express.

Capturing that richness is a recurring challenge for many businesses. Much of a spoken conversation gets lost in transcription, and inaccuracies can lead to misinterpretations or missed opportunities. At Daksha, we’ve experienced this firsthand when communicating insights and action points internally. Fortunately, with the advances in modern AI frameworks, this challenge is not as formidable as it was a few years ago.

The Evolution of Transcription Tools:

In the early days, transcription was a manual, labor-intensive process. As technology advanced, voice recorders became a godsend, but the issue of playing back lengthy files persisted. The next leap? Automated transcription. The initial software solutions were decent but imperfect. The latest advancements, AI-powered tools, promised higher accuracy and speed. But the question loomed: which tool stands out? We looked at some of the more interesting options out there and here is what we concluded from our own experiments.

Set-Up of The Experiment:

To get comparable results, we ran every tool on the same recording: a business call of typical length with several participants. The meeting lasted around 23 minutes and had a few aspects that make transcription harder, such as complicated names, different accents, and audio that was sometimes difficult to understand.

With this somewhat challenging audio, we started the experiment with different transcription frameworks. Below, you can see the names of the transcription tools we used as well as the time each took to transcribe the audio file.

The transcription APIs we used:

| Case No. | Web Tool Link | Model Used | Audio Length | Transcription Time | Noteworthy Findings |
|---|---|---|---|---|---|
| 1 | Replicate’s Whisper | Whisper-large-v2 | 23m 26s | ~5 min | Has correct spelling for names like “Nova”. More words/lines compared to Whisper-medium. |
| 2 | Deepgram’s Whisper-medium Playground | Whisper-medium | 23m 26s | 33s | Similar to Whisper-large but may miss some names/words. |
| 3 | Deepgram’s Nova Playground | Nova | 23m 26s | 13s | Mistakes like “lastly” to “costly”, “these” to “AEs”. Adjusts sentences based on sense. |
| 4 | Deepgram’s Nova-2-ea Playground | Nova-2-ea | 23m 26s | 19s | Has distortions like Nova model. For instance, “look at it together” becomes “look at Did you get a few?”. |
| 5 | Used curl API call to Deepgram’s Whisper-large | Whisper-large | 23m 26s | ~10s | Better punctuations with Deepgram. Replicate’s model adjusts sentences for better grammar. Examples: “Dibram” vs. “Deepgram”, “EPA” vs. “API”. |
| 6 | Live streaming with Deepgram | Meeting (Enhanced Tier) | 23m 26s | ~1-2s per chunk | Some mistakes in non-casual words, e.g., “Whisper” to “HISPER”. Sometimes skips words. |
| 7 | DeepSpeech | Local deployment | 23m 26s | ~2 min | Very quick, but less accurate than Whisper. More wrongly identified words, even though it handles jitter very effectively. |
| 8 | Google Cloud Speech-to-Text API | | 23m 26s | ~4 min | Troubles dealing with accents, slow speed |
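
To put the timings in the table on one scale, a small sketch like the one below divides the audio length by each approximate transcription time. The numbers are copied from the table; the names `speed_factor` and `rank_by_speed` are our own for illustration. Because most timings are rough ("~") and came from different interfaces, the output is best read as orders of magnitude, not as a benchmark.

```python
# Audio length from the table: 23m 26s.
AUDIO_SECONDS = 23 * 60 + 26

# Approximate transcription times in seconds, copied from the table.
# Case 6 (live streaming) is left out because it was measured per chunk,
# not for the whole file.
TRANSCRIPTION_SECONDS = {
    "Replicate Whisper-large-v2": 5 * 60,
    "Deepgram Whisper-medium": 33,
    "Deepgram Nova": 13,
    "Deepgram Nova-2-ea": 19,
    "Deepgram Whisper-large (curl)": 10,
    "DeepSpeech (local)": 2 * 60,
    "Google Cloud Speech-to-Text": 4 * 60,
}


def speed_factor(audio_seconds: float, elapsed_seconds: float) -> float:
    """Seconds of audio transcribed per second of processing time."""
    return audio_seconds / elapsed_seconds


def rank_by_speed(times: dict[str, float]) -> list[tuple[str, float]]:
    """Return (tool, speed factor) pairs, fastest first."""
    factors = [(name, speed_factor(AUDIO_SECONDS, t)) for name, t in times.items()]
    return sorted(factors, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, factor in rank_by_speed(TRANSCRIPTION_SECONDS):
        print(f"{name:32s} {factor:6.1f}x real time")
```

Speed is only half of the picture, of course: the last column of the table matters just as much when choosing a tool.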

Findings

At first glance, each tool showcased commendable performance, making it seem like a tight race. However, the devil is in the details. The Whisper-large and Whisper-medium models were neck and neck in terms of accuracy, but when the stakes were raised with nuanced contexts, especially recognizing proper nouns like “Nova,” Whisper-large managed to shine just a tad brighter.

The Nova models, in their quest for speed, occasionally traded off accuracy. Words were at times replaced with phonetically similar counterparts, such as “lastly” becoming “costly,” which distorted the intended meaning. A surprising revelation was how the platform affected the Whisper model’s performance. While the core technology remained consistent, its execution varied. Deepgram’s adaptation of the Whisper model displayed a remarkable blend of speed and contextual understanding, outpacing its counterpart hosted on Replicate (roughly 10 seconds versus about 5 minutes for the same file).

In essence, while all tools brought something to the table, nuances in their performance underscored the importance of choosing the right tool for specific needs.
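
The substitutions described in these findings can be put on a common scale with a word error rate (WER): the number of word-level insertions, deletions, and substitutions needed to turn the transcript into the reference, divided by the number of reference words. We did not compute WER in our experiment, so the sketch below is only an illustration of how one might quantify such errors. It assumes a hand-corrected reference transcript is available, and the function name `word_error_rate` is our own.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing in hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Snippet from case 4 in the table: 5 edits / 4 reference words = 1.25
    print(word_error_rate("look at it together", "look at Did you get a few?"))
```

Note that WER can exceed 1.0 when the transcript inserts more words than the reference contains, as in the snippet above. It also treats every word equally, so a misspelled name counts the same as a dropped filler word, which is why we looked at proper nouns separately.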

Drawing Conclusions:

The Whisper-large model emerged as our top recommendation, for now, especially when it is served through Deepgram. We were surprised that the Whisper-large model was so quick, even quicker than the Whisper-medium model. The Whisper-large model, combining speed with precision, therefore seems best equipped for the intricate world of business discussions.

Takeaways

The power of an effective transcription tool is transformative. No longer are the insights of a crucial meeting confined to the attendees. With the right tool, the essence of the discussion can be captured, shared, and revisited.

We recommend giving the Whisper-large model on Deepgram a shot. If you’re looking for a seamless start, we’ve got you covered with tools and resources. Here’s to making the insights in every recording accessible and enduring.
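
For readers who want to try this, here is a minimal Python sketch of the kind of request we sent with curl. It is written under assumptions and is not our exact call: it assumes Deepgram’s pre-recorded endpoint at `https://api.deepgram.com/v1/listen`, token-based authorization, a `model=whisper-large` query parameter, and a WAV file as input. The helper names `build_request`, `extract_transcript`, and `transcribe` are hypothetical. Please check Deepgram’s current documentation before relying on any of these details.

```python
import json
import time
import urllib.parse
import urllib.request

# Assumed endpoint for pre-recorded audio; verify against Deepgram's docs.
DEEPGRAM_URL = "https://api.deepgram.com/v1/listen"


def build_request(audio: bytes, api_key: str, model: str = "whisper-large",
                  content_type: str = "audio/wav") -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the raw audio bytes."""
    query = urllib.parse.urlencode({"model": model})
    return urllib.request.Request(
        f"{DEEPGRAM_URL}?{query}",
        data=audio,
        headers={"Authorization": f"Token {api_key}", "Content-Type": content_type},
        method="POST",
    )


def extract_transcript(response: dict) -> str:
    """Pull the first transcript out of the assumed response layout."""
    return response["results"]["channels"][0]["alternatives"][0]["transcript"]


def transcribe(path: str, api_key: str) -> tuple[str, float]:
    """Send the file and return (transcript, elapsed seconds)."""
    with open(path, "rb") as audio_file:
        audio = audio_file.read()
    start = time.perf_counter()
    with urllib.request.urlopen(build_request(audio, api_key)) as reply:
        body = json.load(reply)
    return extract_transcript(body), time.perf_counter() - start
```

Calling `transcribe("meeting.wav", api_key)` with your own file and key would return the transcript together with the elapsed time, which is how a timing like the ones in our table could be reproduced.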

By Marvin C. Kunz. Marvin’s primary role at Daksha is to merge the wisdom of psychology with the potential of AI and Prompt Engineering. When he is not busy with that, you can find him talking to strangers about virtually anything under the sun!